This article is once again dedicated to a small creation of my own: a little tool I built a couple of days ago, as usual, out of necessity. I was recently looking for a way to check a large list of links as easily as possible in order to identify the dead ones.

The initial situation

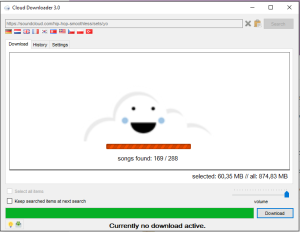

At the latest when you have to check 20 or more links for validity by hand, you realize that there must be a better and faster solution. So I wrote a small program in C# that checks as many links/URLs as you like for their validity. I named the tool “404Checkr”, where 404 refers to the HTTP status code 404, which means that a page or file could not be found.

What does 404Checkr do?

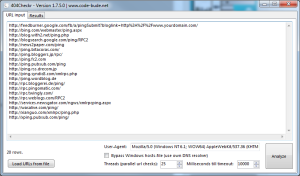

404Checkr can handle an arbitrarily large list of links, which can be entered either manually or via copy and paste. Next, you choose the number of threads, that is, how many pages are checked in parallel. The ideal value depends on the quality and speed of the user’s internet connection.

You can also set the number of seconds after which a timeout error is raised. This is useful if you only want pages to be classified as “OK” when they respond within a certain time. Depending on your requirements, a page may be technically OK but practically unusable if it does not respond until after 15 to 20 seconds.
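To make these two settings concrete, here is a minimal sketch in Python (404Checkr itself is written in C#, and its code is not reproduced here). `threads` caps how many requests are in flight at once, and `timeout` is the per-request limit in seconds; a page that does not answer in time is reported as bad. The function names, the `(url, ok, detail)` result shape, and the decision to ignore proxy settings are assumptions of this sketch, not a description of how 404Checkr actually works.

```python
import concurrent.futures
import http.client
import socket
import urllib.error
import urllib.request

# Assumption for this sketch: an opener that follows redirects but ignores
# proxy environment variables, so results don't depend on the local proxy setup.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def check_url(url: str, timeout: float) -> tuple[str, bool, str]:
    """Return (url, ok, detail); ok is True only for a 2xx answer (after redirects)."""
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            return url, True, f"HTTP {resp.status}"
    except urllib.error.HTTPError as err:
        # 4xx/5xx answers such as 404 Not Found end up here
        return url, False, f"HTTP {err.code}"
    except urllib.error.URLError as err:
        # urllib wraps connection failures here, including connect timeouts
        if isinstance(err.reason, (socket.timeout, TimeoutError)):
            return url, False, "timeout"
        return url, False, f"error: {err.reason}"
    except (socket.timeout, TimeoutError):
        # Connected, but the server did not answer within `timeout` seconds
        return url, False, "timeout"
    except (OSError, ValueError, http.client.HTTPException) as err:
        # Anything else (reset connections, malformed URLs, bad responses) counts as dead
        return url, False, f"error: {err}"


def check_all(urls: list[str], threads: int = 10, timeout: float = 10.0) -> list[tuple[str, bool, str]]:
    """Check `urls` with at most `threads` requests in parallel; results keep the input order."""
    with concurrent.futures.ThreadPoolExecutor(max_workers=threads) as pool:
        return list(pool.map(lambda u: check_url(u, timeout), urls))
```

A call such as `check_all(urls, threads=7, timeout=15)` returns one tuple per URL. A higher thread count is not automatically better: it finishes sooner on a fast connection, but it also sends more simultaneous requests to the same servers.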

In addition, you can specify the user-agent, and experts can activate an alternative DNS service. In that case, the hosts’ IP addresses are not resolved by the Windows DNS system but by 404Checkr’s own resolver.
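Bypassing the system resolver, and with it the Windows hosts file, can be sketched in a few lines of Python. You look up the host name yourself, open the TCP connection to that IP, and send the original name in the `Host` header together with the chosen user-agent. The `resolve` callable stands in for 404Checkr’s own resolver, whose implementation the post doesn’t describe, and `fetch_status` is a hypothetical name. The sketch only handles plain `http://` URLs; HTTPS would also need the host name for SNI and certificate validation.

```python
import http.client
from typing import Callable
from urllib.parse import urlsplit


def fetch_status(url: str, resolve: Callable[[str], str], user_agent: str, timeout: float = 10.0) -> int:
    """GET `url` via the IP returned by `resolve` (not the OS resolver) and return the HTTP status."""
    parts = urlsplit(url)
    if parts.scheme != "http" or not parts.hostname:
        raise ValueError("this sketch only handles http:// URLs with a host name")
    # Custom lookup: neither the hosts file nor the system DNS is consulted
    ip = resolve(parts.hostname)
    conn = http.client.HTTPConnection(ip, parts.port or 80, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        # Keep the original name in Host so name-based virtual hosts serve the right site
        host = parts.hostname if parts.port is None else f"{parts.hostname}:{parts.port}"
        conn.request("GET", path, headers={"Host": host, "User-Agent": user_agent})
        return conn.getresponse().status
    finally:
        conn.close()
```

In practice `resolve` would query a DNS server of your choice. For a quick local test, a dictionary lookup such as `{"example.test": "127.0.0.1"}.__getitem__` is enough.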

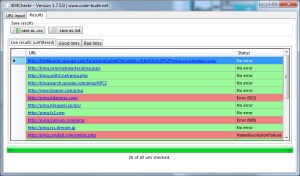

Once these settings are made, the analysis can be started with a single click. You can monitor the progress of the check via a progress bar and a text-based output, and the result list refreshes in real time.
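Real-time progress can be sketched with a thread pool whose results are handled in completion order. Each finished check increments a counter, which is what a progress bar would display, and hands the result to a callback, which would append a row to the result list. `run_with_progress`, `check`, and `on_result` are hypothetical names, and `check` can be any single-URL checker. The callback runs on the calling thread rather than on the worker threads. A GUI would still have to dispatch it to its UI thread, which this sketch leaves out.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, TypeVar

R = TypeVar("R")


def run_with_progress(
    urls: list[str],
    check: Callable[[str], R],
    threads: int,
    on_result: Callable[[int, int, R], None],
) -> None:
    """Run `check` on every URL in parallel and report each result as soon as it arrives."""
    total = len(urls)
    with ThreadPoolExecutor(max_workers=threads) as pool:
        futures = [pool.submit(check, url) for url in urls]
        # as_completed yields futures in the order they finish, not in input order
        for done, future in enumerate(as_completed(futures), start=1):
            on_result(done, total, future.result())


if __name__ == "__main__":
    import time

    def fake_check(url: str) -> tuple[str, bool]:
        time.sleep(0.1)  # stand-in for a real network request
        return url, not url.endswith("/missing")

    demo_urls = ["http://a.test/", "http://b.test/missing", "http://c.test/"]
    run_with_progress(demo_urls, fake_check, threads=2,
                      on_result=lambda done, total, r: print(f"[{done}/{total}] {r}"))
```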

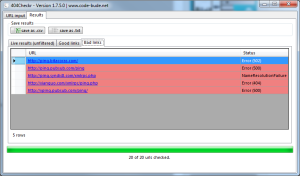

When the check is complete, the results can be exported either as a plain .txt document or as a .csv file. You can choose whether to export all links or only the good or the bad ones.
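Here is a sketch of the export step, assuming each result is a `(url, ok, detail)` tuple. The text export writes one URL per line, and the CSV export adds a header row plus a good/bad column. Both layouts and the names `export_txt` and `export_csv` are assumptions for illustration, since the post doesn’t specify 404Checkr’s actual file formats.

```python
import csv
from typing import Iterable, TextIO

# Assumed result shape: (url, ok, detail), e.g. ("http://b.test/", False, "HTTP 404")
Result = tuple[str, bool, str]


def filter_results(results: Iterable[Result], which: str = "all") -> list[Result]:
    """Keep all results, or only the good ("good") or only the bad ("bad") ones."""
    if which == "all":
        return list(results)
    if which not in ("good", "bad"):
        raise ValueError("which must be 'all', 'good' or 'bad'")
    want_ok = which == "good"
    return [r for r in results if r[1] == want_ok]


def export_txt(results: Iterable[Result], out: TextIO, which: str = "all") -> None:
    """Plain text: one URL per line."""
    for url, _ok, _detail in filter_results(results, which):
        out.write(url + "\n")


def export_csv(results: Iterable[Result], out: TextIO, which: str = "all") -> None:
    """CSV with a header row and columns url, result (good/bad), detail."""
    writer = csv.writer(out)
    writer.writerow(["url", "result", "detail"])
    for url, ok, detail in filter_results(results, which):
        writer.writerow([url, "good" if ok else "bad", detail])
```

When writing to a real file, open it with `newline=""`, for example `open("bad_links.csv", "w", newline="", encoding="utf-8")`, as the `csv` module documentation recommends. Otherwise, extra blank lines can appear on Windows.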

Download and instructions

Of course, you can download and use 404Checkr for free. If, however, you want to use it commercially (for example, in your company), I would appreciate it if you contacted me or at least sent me a small donation via PayPal.

Download: 404Checkr (v. 1.7.5.0)

Screencast – 404Checkr live!

Source code and developer information

Unlike some of my other projects, I’m also providing the source code of 404Checkr. You are welcome to add new functions or work on the performance. If you actually build something new from the project, I’d appreciate a comment or an email. (I always like to see what ideas others come up with that didn’t occur to me. Moreover, really interesting discussions sometimes arise from such situations.)

Two more notes before I give you the download link for the Visual Studio project.

- As I said, you are allowed to create something new from the existing project. What I do not like is when people simply replace my name with theirs or add their logo and then tell everybody that the program was “their genius idea”. I mention this explicitly because I have been getting such requests quite often lately.

- Do not expect too much from the source code. The program was basically built between two cups of coffee. If you get lost in the code, I can’t be held responsible. ;)

Hi, I have used your program to check my click tags, which I use to track my social media ads. I did not understand the results.

All the links failed when the option “bypass Windows hosts-file” was not selected.

bad link status – secure channel failure

And when I selected the option to bypass the Windows hosts-file, all the links passed the test with the status message “too many redirects (>10)”.

Does this mean my URLs are working? Or are they redirecting to a blank page?

Great, neat tool that I really needed.

Works! Thanks!

Thank you very much for this useful tool. It saved me tons of time!!! Appreciated.

Worked fine for me just now – 5000+ URLs, average internet connection.

Thanks Raffi!

Program hangs on large URL list (>10 000 URLs). Screenshot: http://savepic.su/7582550.png

Debugger shows multiple null reference exceptions.

Steps to reproduce: just scan large URL list.

OS: Win7 x64

Tested on this list: http://pastebin.com/QnMSq3gB

1000 urls – ok

10 000 urls – hang.

Great tool btw, I figured out the problem by modifying the source.

Thanks to the author for making the tool open source!

Can you share what you did to modify the source? Thanks!

I’m interested in this too, since I can’t find the error myself: the problem isn’t reproducible on my computer.

Sharing the fixed exe would be great.

Still unfixed, 2 years later, looks like abandonware.

Check one URL and it freezes with an eternal waiting message that ends with an “app killing method”.

It may happen if you check it a second time.

Can you send me a link to 1.5.0?

thanks

Hey Rob,

before “crying” that something is still unfixed, rather try to help fix it. I just tested the program 10 minutes ago: I entered one URL and it ran without problems. So I don’t know what I should fix.

Could you please write down the steps that lead to the error? I need the URL and the clicks you made. (And also the user-agent you entered.)

Best regards,

Raffael

Great tool, thanks

I had over 1700 URLs to check and your tool knocked them out in just over 10 minutes without getting blocked or shut out by the website firewall. I reduced the threads to just 7 to help avoid this. Thanks again for such a cool tool.

Hello,

Can you please implement proxy authentication?

Thanks =)

Great tool. Exactly what I was looking for. Many thanks!

Thanks Raffi…

Hello,

There are a couple of errors.

(Win7 x64)

When I switch to the “Good (or Bad) links” tab and click a link, I get redirected to the link in the same row of the first tab.

I also got false positives while using the DNS resolver (‘No errors’ where there actually was a 404).

Need user-agent!!

Do you mean an option where you can set the user-agent of 404Checkr?

Yess :)

Hey Alex,

have a look at the blog post. ;-) There’s a new version with some new features (custom DNS, user-agent selection and good/bad result lists).

oh, super :)

Thanks)))

Thanks a lot, works great!

Thanks Raffi! I searched far and wide on Google, and then I stumbled upon this awesome tool…works well.

Hey Raffi,

I got your email about 404Checkr and sent you a response. Did you get it?

Thank you so much!

Tim

Hey rafi,

I like your link checker a lot, and I want to pay you for additional features. Can you please contact me? My contact email is in the contact field.

Thank you, works like a charm.